The weather is getting colder and winter is around the corner, which to me is a clear invitation to cosy up at home and start reading the papers, blogs and books that I had been bookmarking in the summer months.

The AI bubble hasn't popped yet, but AI models, at least the publicly available ones, have not shown the exponential improvements the tech gurus were claiming they would. Nonetheless, the rat race for AI supremacy is an entertaining show to follow these days, and from time to time, there are surprises along the way.

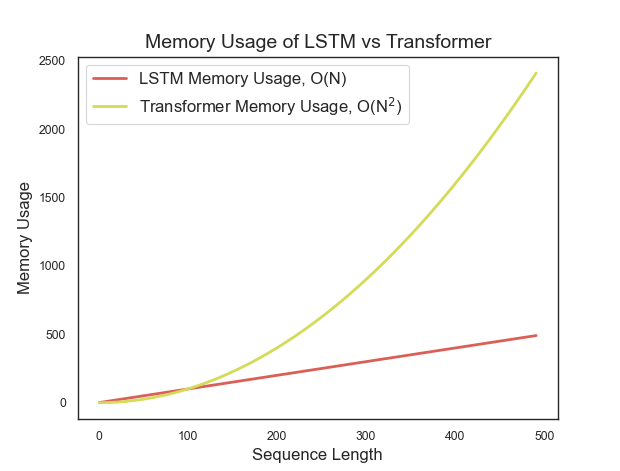

So, circling back to these last couple of years, Transformers have redefined the landscape of deep learning and have become the bread and butter of AI professionals and aficionados alike, particularly in tasks involving sequential data, from language modelling to machine translation. Their ability to process sequences in parallel revolutionized many applications, outperforming traditional models like Recurrent Neural Networks (RNNs) in both accuracy and training efficiency. However, the impressive performance of Transformers comes at a cost: they require significantly more memory and compute, especially as sequence lengths grow.

A couple of weeks ago, I came across a recent study by researchers at Mila and BorealisAI, named "Were RNNs All We Needed?". This paper revisits classic RNN architectures such as LSTMs and GRUs to explore whether these models, when adapted to modern training techniques, could still compete with Transformers.

By restructuring RNNs to be fully parallelisable during training, the authors propose minimal versions that retain the efficiency of RNNs for long sequences, sparking a debate over whether the shift to Transformers (and attention) was as essential as we thought.

RNNs vs Attention

RNNs, including Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs), are designed to handle sequential data by maintaining a “memory” of previous inputs through hidden states that are updated at each time step. LSTMs and GRUs introduce gating mechanisms that control the flow of information, helping them to capture long-term dependencies in sequences. The main challenge with RNNs, however, is that they process data sequentially, updating hidden states one step at a time. This dependency on prior steps makes them difficult to parallelize during training, resulting in slower training times and potential issues with vanishing or exploding gradients over long sequences.

Transformers, on the other hand, use a self-attention mechanism that processes entire sequences at once rather than sequentially. The self-attention mechanism allows each token in a sequence to directly “attend” to every other token, capturing dependencies between distant words or data points without relying on a step-by-step approach. This architecture allows Transformers to parallelize computations across tokens, resulting in significantly faster training. However, this design also means that Transformers require substantial memory and computational power, especially as the sequence length grows, because the cost of self-attention is quadratic, O(N²), in the sequence length N.
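To see where the quadratic cost comes from, here is a minimal sketch of single-head self-attention in plain Python. It is a toy under stated assumptions: there are no learned projections, no masking and no batching, and the function names `attention_weights` and `self_attention` are mine, not from any library.

```python
import math
from typing import List

Vector = List[float]


def softmax(row: Vector) -> Vector:
    """Numerically stable softmax over one row of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(queries: List[Vector], keys: List[Vector]) -> List[Vector]:
    """Return the N x N matrix of attention weights (one row per query)."""
    scale = 1.0 / math.sqrt(len(keys[0]))
    # Every query is scored against every key: N * N dot products.
    scores = [
        [scale * sum(q_i * k_i for q_i, k_i in zip(q, k)) for k in keys]
        for q in queries
    ]
    return [softmax(row) for row in scores]


def self_attention(
    queries: List[Vector], keys: List[Vector], values: List[Vector]
) -> List[Vector]:
    """Each output token is a weighted average of all value vectors."""
    weights = attention_weights(queries, keys)
    dim_v = len(values[0])
    return [
        [sum(w * v[j] for w, v in zip(row, values)) for j in range(dim_v)]
        for row in weights
    ]
```

The weight matrix has one entry per pair of tokens, so doubling the number of tokens quadruples both the work and the memory needed to hold it.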

Moreover, despite the amazing language modelling capabilities of attention mechanisms, Transformers have been far less convincing at forecasting. In that sense, if we were to work with biological data or financial data, and especially with time-series forecasting, Transformers could well give us disappointing results.

There are still plenty of use cases for RNNs. If only they could be run in parallel...

Barebones and parallelizable RNNs are actually quite powerful

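That's where BorealisAI comes in. In the paper, they rely on the parallel scan algorithm (also known as prefix scan, a well-known technique dating back to around 1990). This algorithm computes the hidden states for all time steps at once, so training no longer has to use backpropagation through time (BPTT), the step-by-step procedure that prevents parallelisation. The models are still trained with ordinary backpropagation.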
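To give a feel for why a scan helps, here is a minimal sketch in plain Python. It assumes the recurrence has the linear form h_t = a_t * h_{t-1} + b_t, where a_t and b_t do not depend on earlier hidden states. I use the simple Hillis-Steele flavour of the scan for readability, which is my choice for illustration and not necessarily what the paper implements; the function names are hypothetical.

```python
from typing import List, Tuple

Pair = Tuple[float, float]  # (a, b) stands for the map h -> a * h + b


def combine(left: Pair, right: Pair) -> Pair:
    """Compose two affine maps: apply `left` first, then `right`."""
    a1, b1 = left
    a2, b2 = right
    return (a1 * a2, a2 * b1 + b2)


def sequential_recurrence(
    a: List[float], b: List[float], h0: float = 0.0
) -> List[float]:
    """Reference version: one step at a time, N dependent steps."""
    out, h = [], h0
    for a_t, b_t in zip(a, b):
        h = a_t * h + b_t
        out.append(h)
    return out


def scan_recurrence(
    a: List[float], b: List[float], h0: float = 0.0
) -> List[float]:
    """Same result via an inclusive scan with about log2(N) rounds.

    Within a round, every position only reads values from the previous
    round, so all positions could be updated simultaneously on parallel
    hardware. Plain Python still runs them one after another.
    """
    pairs: List[Pair] = list(zip(a, b))
    offset = 1
    while offset < len(pairs):
        pairs = [
            combine(pairs[i - offset], pairs[i]) if i >= offset else pairs[i]
            for i in range(len(pairs))
        ]
        offset *= 2
    # pairs[t] now holds the composition of steps 0..t; apply it to h0.
    return [a_t * h0 + b_t for a_t, b_t in pairs]
```

The trick is that composing two steps of the recurrence gives another step of the same form, and that composition is associative, so the steps can be grouped in any order instead of strictly left to right.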

Without getting into deep technical details, the parallel scan algorithm requires some tweaking on the design of GRUs and LSTMs: (1) removing the gates' dependence on previous hidden states, and (2) removing the range restriction (tanh) on the hidden states. The former has been a bit controversial, but there are now proven models, such as Mamba, whose gating likewise depends only on the current input.
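For the GRU case, this is how I read the two tweaks, written in standard GRU notation. Take it as a summary sketch rather than a verbatim copy of the paper's equations; here σ is the sigmoid, ⊙ is element-wise multiplication and Linear is a learned linear layer.

$$
\begin{aligned}
\text{GRU:}\quad & z_t = \sigma(\mathrm{Linear}([x_t, h_{t-1}])) \\
& r_t = \sigma(\mathrm{Linear}([x_t, h_{t-1}])) \\
& \tilde{h}_t = \tanh(\mathrm{Linear}([x_t, r_t \odot h_{t-1}])) \\
& h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t \\[6pt]
\text{minGRU:}\quad & z_t = \sigma(\mathrm{Linear}(x_t)) \\
& \tilde{h}_t = \mathrm{Linear}(x_t) \\
& h_t = (1 - z_t) \odot h_{t-1} + z_t \odot \tilde{h}_t
\end{aligned}
$$

In the minimal version the reset gate is gone, the tanh is gone, and the only place the previous hidden state still appears is the final line, where it enters linearly with coefficients that depend on the input alone.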

The authors from BorealisAI propose revised minimal versions for GRUs and LSTMs, which they call minGRU and minLSTM. These minimal models retain the core functionality of traditional RNNs, but their gates no longer depend on previous hidden states, which is what impeded parallel computation. As mentioned, by eliminating the need for backpropagation through time (BPTT), the models become fully parallelisable. In addition, these models use fewer parameters than their traditional counterparts, reducing the overall model size while still achieving comparable, and in some tasks better, performance than full-fledged Transformer models.
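Here is a toy sketch of a minGRU-style layer in plain Python, as I understand the idea. It uses a single scalar feature and scalar weights (`w_z`, `b_z`, `w_h`, `b_h` are hypothetical names I made up for illustration), and it leaves out the log-space formulation that the paper describes for numerical stability.

```python
import math
from typing import List, Tuple


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def min_gru_coefficients(
    xs: List[float], w_z: float, b_z: float, w_h: float, b_h: float
) -> List[Tuple[float, float]]:
    """Compute (a_t, b_t) for h_t = a_t * h_{t-1} + b_t.

    The gate and the candidate state depend only on x_t, so every
    time step can be processed independently of the others.
    """
    coeffs = []
    for x in xs:
        z = sigmoid(w_z * x + b_z)   # update gate, from the input alone
        h_tilde = w_h * x + b_h      # candidate state, no tanh
        coeffs.append((1.0 - z, z * h_tilde))
    return coeffs


def min_gru_states(
    xs: List[float],
    w_z: float,
    b_z: float,
    w_h: float,
    b_h: float,
    h0: float = 0.0,
) -> List[float]:
    """Sequential reference: fold the precomputed coefficients into h."""
    h, states = h0, []
    for a_t, b_t in min_gru_coefficients(xs, w_z, b_z, w_h, b_h):
        h = a_t * h + b_t
        states.append(h)
    return states
```

The loop in `min_gru_states` is only there as a readable reference. Since all the coefficients exist before any hidden state is computed, that final fold is exactly the kind of linear recurrence a parallel scan can take over during training.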

According to their paper, minLSTMs and minGRUs can train 175 times faster than their traditional counterparts for sequences of length 512, significantly improving computational efficiency without sacrificing predictive performance.

These minimal models are tested on some benchmarks that have become standard in the field, such as:

- Selective copying task: this task tests the ability of models to memorize and extract relevant information from noisy input sequences. The minimal models performed competitively, solving the task similarly to state-of-the-art recurrent sequence models like Mamba's S6 model.

- Reinforcement Learning: in experiments with the D4RL benchmark, which involves continuous control tasks in environments like HalfCheetah and Hopper, minLSTMs and minGRUs outperform other recurrent models like Decision S4 and achieve performance competitive with state-of-the-art models like Decision Mamba and Transformers.

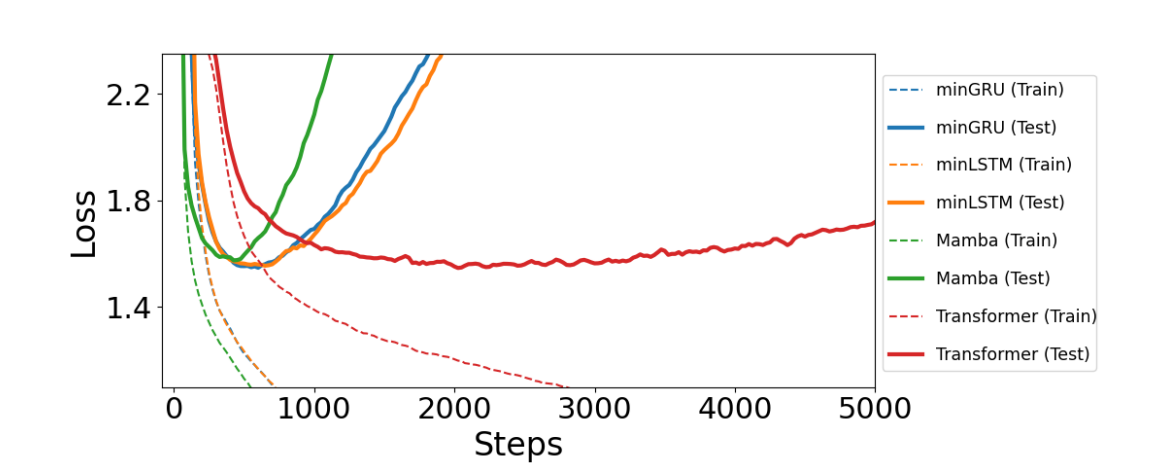

- Language modelling: in a character-level GPT task trained on Shakespeare’s works, minLSTMs and minGRUs perform comparably to Transformers and Mamba in terms of test loss but train significantly faster. This demonstrates the strong empirical performance of the minimal RNN models across a range of sequence tasks.

These minimal models not only achieve similar or better accuracy but also drastically reduce training time, as shown, for instance, by the dashed lines in the plot for the language modelling task.

I was quite hyped when I came across this paper and, being completely honest, part of me really hopes that these minimal models or RNN + parallel scan alternatives will be researched in the upcoming years. Most individual AI users will still benefit from the capabilities of these AI models, and since training takes fewer computational resources, the impact of AI on the environment and our national energy grids would lessen.

Now that AI is here to stay, shouldn't we aim to optimise the performance of these models instead of chasing AGI?